For every online entity, improving SEO rankings holds primary value. They need to stand out in the crowd and want to win better rankings from search engines. Search engines rely on bots for crawling websites. These crawlers determine how a page is perceived, and ultimately impact the rankings. Whenever a bot such as the Google crawler or Bingbot crawls your site through a link or sitemap, it checks and indexes all the links present there.

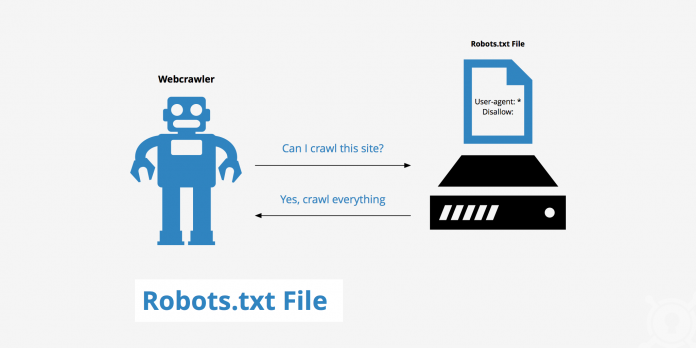

Robots.txt, along with Sitemap.xml, sits at the root of the website's domain. For a new website with limited content, crawlers will easily maneuver and crawl through the content. The problem begins when the website is big, with a lot of pages, links and data present. Crawlers have a limited time to crawl each website. Chances are that, due to the shortage of time, the crawlers only go through a few pages and miss out on the important ones. Ultimately, your rankings are affected. A robots.txt file tells the search engine bots which URLs they can access on a website. It is helpful for managing the crawler traffic on the website, and for keeping a specific file from getting noticed by Google or other search engines.
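Because the file always sits at the root of the domain, its address can be worked out from any page on the site. The snippet below is a minimal sketch of that idea; the function name robots_txt_url and the example.com address are placeholders chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; the path is always /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post-1?ref=home"))
# prints https://www.example.com/robots.txt
```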

The robots.txt file is for stopping the crawlers from crawling particular parts of your site. Whenever a well-behaved bot comes to your blog for indexing, it will read the robots.txt file first and follow it.

How is a Robots.txt File Generated?

Robots.txt is a plain text file. You do not need any specific software for creating a robots.txt file. All you need to do is open any text editor on your computer, such as Notepad, and create the robots.txt file as per requirement. If you use a word processor such as Word, save the file as plain text, since crawlers cannot read a formatted document. Each record you create holds important information.

Example:

User-agent: Googlebot

Disallow: /cgi-bin
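If you would rather produce the file from a script than type it by hand, a few lines are enough. This is only a sketch: the helper name render_robots_txt is made up for illustration, and a temporary directory stands in for your web root.

```python
from pathlib import Path
import tempfile

# The record from the example above, as (field, value) pairs.
RULES = [
    ("User-agent", "Googlebot"),
    ("Disallow", "/cgi-bin"),
]


def render_robots_txt(records):
    """Join the records into plain text, one 'Field: value' per line."""
    return "\n".join(f"{field}: {value}" for field, value in records) + "\n"


# A temporary directory plays the role of the web root in this sketch.
with tempfile.TemporaryDirectory() as web_root:
    target = Path(web_root) / "robots.txt"
    target.write_text(render_robots_txt(RULES), encoding="utf-8")
    print(target.read_text(encoding="utf-8"))
```

Note that the lines of one record are written directly under each other. Some parsers treat a blank line as the end of a record.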

Protocols Used in Robots.txt Files

A protocol is a format used for giving commands or providing instructions. Robots.txt files use a few protocols. The primary one is the Robots Exclusion Protocol (REP), which tells the bots which pages or resources to avoid. The other is the Sitemaps protocol, which tells the bots which pages they can crawl.

Understanding User Agent

Each group of rules in a robots.txt file begins with a User-agent line.

Example

User-agent: *

Disallow: /_esa

Disallow: /__mesa/

Every person or program on the internet has a “user agent”, also known as an assigned name. For individuals, it comprises information such as the browser type and the operating system version. However, personal information is not included. This assigned name lets websites show content that is compatible with the user’s system. For crawlers, the user agent helps the website determine which type of bot is crawling it.

Website admins give specific instructions to specific bots by writing different rules for different bot user agents. Common search engine bot user agent names include:

- Googlebot

- Googlebot-Image

- Googlebot-News

- Googlebot-Video

- Bingbot

- MSNBot-Media
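To see how rules written for different user agents play out, you can feed a robots.txt to Python's built-in urllib.robotparser module. The sketch below assumes a made-up /photos/ rule for Googlebot-Image next to the wildcard group from the example above. A bot that has its own group follows that group and ignores the * group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for Googlebot-Image, one for everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /_esa
Disallow: /__mesa/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot-Image", "Bingbot"):
    for path in ("/photos/cat.png", "/_esa/page"):
        verdict = "allowed" if parser.can_fetch(agent, path) else "blocked"
        print(f"{agent} -> {path}: {verdict}")
# Googlebot-Image -> /photos/cat.png: blocked
# Googlebot-Image -> /_esa/page: allowed
# Bingbot -> /photos/cat.png: allowed
# Bingbot -> /_esa/page: blocked
```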

How Does a Disallow Command Work?

As you might have seen in the examples, the Disallow command is the most prevalent one in REP. The Disallow command tells bots which pages not to access. The prohibited paths are listed after the Disallow command. These pages are not necessarily hidden. They are usually pages that hold no value for a Google or Bing user.
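A Disallow value is matched as a prefix of the URL path, so one rule can cover more than you expect. The following sketch uses Python's urllib.robotparser with the Googlebot record shown earlier; the test paths are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: Googlebot",
    "Disallow: /cgi-bin",
])

# Every path that starts with /cgi-bin is blocked, including /cgi-bin-old/.
for path in ("/cgi-bin/form.pl", "/cgi-bin-old/", "/blog/"):
    verdict = "allowed" if parser.can_fetch("Googlebot", path) else "blocked"
    print(f"{path}: {verdict}")
# /cgi-bin/form.pl: blocked
# /cgi-bin-old/: blocked
# /blog/: allowed
```

If you only mean the directory itself, writing the rule with a trailing slash (Disallow: /cgi-bin/) avoids catching similarly named paths.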

What is an Allow Protocol?

The Allow command makes it possible for crawlers to reach a particular page while the rest of the pages around it stay disallowed. Major search engines such as Google and Bing recognize Allow, but not every crawler does.
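Here is a small sketch of an Allow rule carving one page out of a blocked directory, checked with Python's urllib.robotparser. The /private/ paths are hypothetical. One assumption worth flagging: Python's parser applies the first rule that matches, so the Allow line is placed before the Disallow line here, whereas Google picks the most specific rule regardless of order.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Allow: /private/annual-report.html",  # the one page crawlers may reach
    "Disallow: /private/",                 # everything else in the directory
])

print(parser.can_fetch("Googlebot", "/private/annual-report.html"))  # True
print(parser.can_fetch("Googlebot", "/private/notes.html"))          # False
```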

What is a Crawl Delay?

The crawl delay command stops the spider bots from overtaxing your server. It sets how long a bot should wait between successive requests. Crawlers that support it generally read the value in seconds.

Crawl-Delay: 8

It means the bot should wait 8 seconds between requests.

Google does not recognize this command, but Yahoo, Bing and other search engines do.
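If you write your own crawler, you can read the delay the same way the supporting bots do. This is a sketch using Python's urllib.robotparser (crawl_delay is available from Python 3.6); the Bingbot group is an assumed example.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: Bingbot",
    "Crawl-Delay: 8",
])

# The delay applies only to the group it was written in.
print(parser.crawl_delay("Bingbot"))    # 8
print(parser.crawl_delay("Googlebot"))  # None, no delay was set for this bot
```

A polite crawler would pause for that many seconds, for instance with time.sleep, before sending its next request.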

What is a Sitemap Protocol?

The Sitemap protocol helps the spiders know what to include in their crawling. A sitemap is a machine-readable list of all the pages present on the website. It ensures that the crawlers do not miss anything important on the website. However, the Sitemap protocol does not force the bots to prioritize one page over another.
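In robots.txt, the sitemap is announced with a Sitemap line that holds the full address of the sitemap file. The sketch below reads such a line back with Python's urllib.robotparser (site_maps needs Python 3.8 or newer); the example.com address is a placeholder.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "Sitemap: https://www.example.com/sitemap.xml",
    "User-agent: *",
    "Disallow: /_esa",
])

# The Sitemap line stands on its own and belongs to no user agent group.
print(parser.site_maps())
# ['https://www.example.com/sitemap.xml']
```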

Now, there are a few rookie mistakes that you need to watch out for:

- Keep comments on their own lines, starting with #, rather than placing them after a rule. Also do not leave any space at the beginning of a line.

- Do not alter the syntax of the commands. Each rule is a field name, a colon and a value.

- Use upper case and lower case letters properly. Paths are case-sensitive: if you want to block a “Download” directory but write “download” in the robots.txt file, the rule will not match it.

- If you want to keep the crawler away from more than one directory or page, avoid writing their names together in one rule, such as Disallow:/support/images, which only matches the path /support/images.

Instead go for:

Disallow: /support

Disallow: /images

- Use Disallow: / if you don’t want crawlers to access any page of your website.
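Several of these mistakes can be caught automatically before the file goes live. The checker below is a rough sketch, not a full validator: the function name lint_robots_txt and the list of known fields are assumptions made for this example.

```python
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "crawl-delay", "sitemap"}


def lint_robots_txt(text):
    """Return (line number, problem) pairs for common rookie mistakes."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip() or line.lstrip().startswith("#"):
            continue  # skip blank lines and comment lines
        if line != line.lstrip():
            problems.append((number, "leading whitespace"))
        field, colon, value = line.strip().partition(":")
        if not colon:
            problems.append((number, "missing colon"))
            continue
        name = field.strip().lower()
        value = value.split("#", 1)[0]  # ignore a trailing comment
        if name not in KNOWN_FIELDS:
            problems.append((number, f"unknown field {field.strip()!r}"))
        elif name in {"allow", "disallow"} and len(value.split()) > 1:
            problems.append((number, "more than one path in a single rule"))
    return problems


SAMPLE = """\
User-agent: *
 Disallow: /support
Disalow: /images
Disallow: /support /images
"""

for number, problem in lint_robots_txt(SAMPLE):
    print(f"line {number}: {problem}")
# line 2: leading whitespace
# line 3: unknown field 'Disalow'
# line 4: more than one path in a single rule
```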

Do Not Forget to Block Bad SEO Bots!

Competitors use SEO tools such as Semrush, Majestic and Ahrefs, whose bots crawl through websites and collect information that competitors can use to add value to their own sites. It is important for you to be aware of these bots and block them to protect yourself. Here is what you can use in robots.txt to block SEO agents:

User-agent: MJ12bot

Disallow: /

User-agent: SemrushBot

Disallow: /

User-agent: SemrushBot-SA

Disallow: /

User-agent: dotbot

Disallow: /

User-agent: AhrefsBot

Disallow: /

User-agent: Alexibot

Disallow: /

User-agent: SurveyBot

Disallow: /

User-agent: Xenu's

Disallow: /

User-agent: Xenu's Link Sleuth 1.1c

Disallow: /

User-agent: rogerbot

Disallow: /

# Block NextGenSearchBot

User-agent: NextGenSearchBot

Disallow: /

# Block ia-archiver from crawling site

User-agent: ia_archiver

Disallow: /

# Block archive.org_bot from crawling site

User-agent: archive.org_bot

Disallow: /

# Block Archive.org Bot from crawling site

User-agent: Archive.org Bot

Disallow: /

# Block LinkWalker from crawling site

User-agent: LinkWalker

Disallow: /

# Block GigaBlast Spider from crawling site

User-agent: GigaBlast Spider

Disallow: /

# Block ia_archiver-web.archive.org_bot from crawling site

User-agent: ia_archiver-web.archive.org

Disallow: /

# Block PicScout Crawler from crawling site

User-agent: PicScout

Disallow: /

# Block BLEXBot Crawler from crawling site

User-agent: BLEXBot Crawler

Disallow: /

# Block TinEye from crawling site

User-agent: TinEye

Disallow: /

# Block SEOkicks

User-agent: SEOkicks-Robot

Disallow: /

# Block BlexBot

User-agent: BLEXBot

Disallow: /

# Block SISTRIX

User-agent: SISTRIX Crawler

Disallow: /

# Block Uptime robot

User-agent: UptimeRobot/2.0

Disallow: /

# Block Ezooms Robot

User-agent: Ezooms Robot

Disallow: /

# Block netEstate NE Crawler (+http://www.website-datenbank.de/)

User-agent: netEstate NE Crawler (+http://www.website-datenbank.de/)

Disallow: /

# Block WiseGuys Robot

User-agent: WiseGuys Robot

Disallow: /

# Block Turnitin Robot

User-agent: Turnitin Robot

Disallow: /

# Block Heritrix

User-agent: Heritrix

Disallow: /

# Block pricepi

User-agent: pimonster

Disallow: /

User-agent: Pimonster

Disallow: /

User-agent: Pi-Monster

Disallow: /

# Block Eniro

User-agent: ECCP/1.0 ([email protected])

Disallow: /

# Block Psbot

User-agent: Psbot

Disallow: /

# Block Youdao

User-agent: YoudaoBot

Disallow: /

# Block NaverBot

User-agent: NaverBot

User-agent: Yeti

Disallow: /

# Block ZBot

User-agent: ZBot

Disallow: /

# Block Vagabondo

User-agent: Vagabondo

Disallow: /

# Block SimplePie

User-agent: SimplePie

Disallow: /

# Block Wget

User-agent: Wget

Disallow: /

# Block Pixray-Seeker

User-agent: Pixray-Seeker

Disallow: /

# Block BoardReader

User-agent: BoardReader

Disallow: /

# Block Quantify

User-agent: Quantify

Disallow: /

# Block Plukkie

User-agent: Plukkie

Disallow: /

# Block Cuam

User-agent: Cuam

Disallow: /

# https://megaindex.com/crawler

User-agent: MegaIndex.ru

Disallow: /

User-agent: megaindex.com

Disallow: /

User-agent: +http://megaindex.com/crawler

Disallow: /

User-agent: MegaIndex.ru/2.0

Disallow: /

User-agent: megaIndex.ru

Disallow: /
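A block list this long is easier to maintain when it is generated from a plain list of bot names. The sketch below is one possible approach: render_block_list is a made-up helper, only a handful of the names above are included, and duplicates are dropped without regard to case because user agent names are matched case-insensitively.

```python
from urllib.robotparser import RobotFileParser

# A short sample of the bots listed above; extend it as needed.
BLOCKED_AGENTS = [
    "MJ12bot", "SemrushBot", "SemrushBot-SA", "dotbot",
    "AhrefsBot", "rogerbot", "ahrefsbot",  # last entry is a case duplicate
]


def render_block_list(agents):
    """Build one 'User-agent / Disallow: /' group per unique bot name."""
    seen = set()
    groups = []
    for agent in agents:
        key = agent.lower()
        if key in seen:
            continue  # same bot already listed under different casing
        seen.add(key)
        groups.append(f"User-agent: {agent}\nDisallow: /\n")
    return "\n".join(groups)


robots_txt = render_block_list(BLOCKED_AGENTS)
print(robots_txt)

# Sanity check: the listed bots are blocked, a search engine bot is not.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("AhrefsBot", "/"))  # False
print(parser.can_fetch("Googlebot", "/"))  # True
```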

Be Sure That Your Content is Not Affected by the New Robots.txt File

Sometimes, inexperienced programmers do not consider the impact that updating the robots.txt file has on other content. You can use Google Search Console to see whether your content is being blocked by the robots.txt file. Just log in to Google Search Console, select the site, and run the diagnosis by selecting Diagnostic and then Fetch as Google. In newer versions of Search Console, the URL Inspection tool serves this purpose.
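You can also run a quick check of your own before uploading a new robots.txt. The sketch below uses Python's urllib.robotparser to list which of your important pages the new rules would block; the function name blocked_urls, the rules and the example.com addresses are all hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given rules would keep the agent away from."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Draft of the new file and the pages that must stay crawlable.
NEW_ROBOTS_TXT = """\
User-agent: *
Disallow: /support
"""

MUST_STAY_CRAWLABLE = [
    "https://www.example.com/",
    "https://www.example.com/blog/",
    "https://www.example.com/support-us/",
]

print(blocked_urls(NEW_ROBOTS_TXT, MUST_STAY_CRAWLABLE))
# ['https://www.example.com/support-us/']
```

In this made-up case the rule meant for the /support directory also catches /support-us/, which is exactly the kind of side effect worth spotting before the file goes live.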

Conclusion

Robots.txt is important for everyone trying to excel in their SEO strategy. The protocol gives guidance to the search engine bots and saves them time and energy. It also helps the bots avoid certain pages that might impact the SEO rankings negatively. Think of the robots.txt file as a set of instructions for the bots. It is included in the site's source files and is intended for managing the activities of bots. The robots.txt file cannot enforce its instructions on the spiders; it only tells them what to do. Think of the file as a set of rules for behaving in a restaurant. No one can impose those rules on the people coming into the restaurant. Only the responsible customers will follow the rules. The same is the case with robots.txt files. A good bot will follow the instructions.

Many newbies and inexperienced web designers end up making mistakes that hamper the growth of their website, decrease their rankings and confuse the bots. These mistakes include spelling mistakes, misuse of upper and lower case letters, and even altering the syntax of the commands. It is crucial for you to understand that, just like every language, a computer language follows a certain syntax, which you need to follow religiously.

Once you wrap your head around robots.txt files, your blog and website can benefit massively from them.